A Modern Mirror: How Conversations Surrounding Artificial Intelligence Perpetuate Gender Bias

As Artificial Intelligence has become increasingly prevalent, there is a growing debate among researchers about its potential impacts on future societies, specifically as it relates to gender inequality and discrimination. Artificial Intelligence, or AI, is described as computer systems with varying levels of complexity that complete tasks by mimicking human intelligence (Healey, 2020). The use of AI has increased significantly in recent years. People rely on it more in their day-to-day lives, whether in the form of generative AI that provides informational resources, such as ChatGPT or Google Gemini, or smart home technology, such as Amazon Alexa or Google Home. Implementing AI has sparked debates on societal inequalities. Regardless of the stance researchers take, there are rhetorical biases within these debates that only further perpetuate the issues associated with gender inequality and prejudice. This chauvinism is evident in the negative portrayal of women in the images that accompany these conversations and in the language used to discuss voice assistants, as well as in a failure to recognize gender identities outside of the gender binary.

MIT Sloan’s article “Could AI Be the Cure for Workplace Gender Inequality” contains one example of biased rhetoric against women (Beck & Libert, 2017). While the article presents an explicit message of how AI could ultimately help lead to more gender equality, the visual representations of AI within the article contradict this by presenting an inherently biased outlook against women (Beck & Libert, 2017). The image (Figure 1) features the figure of an AI humanoid holding the figure of a miniature person in its hand. While readers could perceive the AI humanoid as genderless, it seems to have a more masculine figure based on its flat chest, physical “build,” and Adam’s apple-like protrusion in the form of hardware on its neck. The miniature person seems more feminine based on the hourglass body shape, clothing choice, and hair length/style. The fact that the image displays the than the AI humanoid “man” implies that the humanoid is more significant, and therefore superior to the “woman”. Furthermore, this image is positioned directly next to a quote: “We believe that AI has the ability to help level the playing field” (Beck & Libert, 2017). The proximity with which this quote is positioned next to the image suggests a connection between the two, and the phrase “playing field” implies a game of sorts. When applying that logic to the image, the “woman,” in this case, seems to signify a pawn or piece in a game, which players can easily manipulate. Additionally, this parallel drawn between the woman and a pawn presents a perspective that objectifies women, mainly because the AI humanoid “man” is shown to be in the role of a player who has control over the pawn. The fact that the AI humanoid “man” is holding the “woman” in the palm of its hand explicitly associates AI technology with the common phrase of holding someone in the palm of your hand.

Figure 1. AI Humanoid holding a miniature “woman” figure in its palm against a teal background.

This phrase is an alternate way to say that someone has complete control over another person. Communicating through visual representations is a powerful way to convey information online, as they often accompany or reinforce the body of an article (Nuckols, 2020). However, since the visual representation of MIT Sloan’s article conveys biased rhetoric against women, it directly contradicts the article’s explicit message of how AI could potentially help foster gender equality, which could mislead readers.

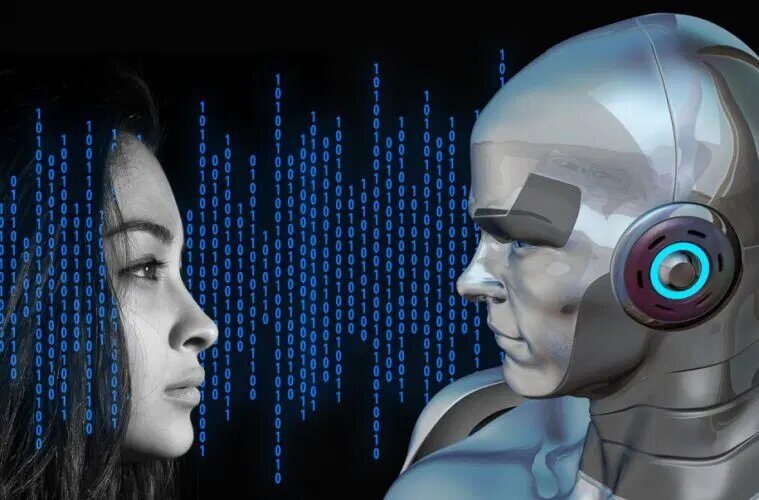

An article from Irish Tech News titled “Achieving Gender Equality Through AI” similarly communicates an implicitly biased narrative (McGuire, 2018). As the title expresses, the author believes AI can be used to counter gender biases (McGuire, 2018). The headline’s use of the word “achieving” carries a positive connotation, suggesting that gender equality is entirely possible with Artificial Intelligence, because “achieving” is often associated with success. However, an image included in the article contradicts this sentiment (McGuire, 2018). The image above the headline (Figure 2) consists of two figures facing each other (McGuire, 2018). The figure on the left seems more feminine because of its facial features, such as the fuller lips and jawbone structure, as well as the longer hairstyle. This feminine figure also has a human face, unlike the figure on the right, which seems more masculine because of its lower eyebrow positioning, bone structure, smaller lips, and the build of its shoulders.

Figure 2. Figure of a woman and silver-colored AI Humanoid facing each other with lines of binary code going across the woman figure’s face and the background.

The metallic, non-human look given to the exterior of the masculine figure suggests that it is supposed to represent Artificial Intelligence. The woman is positioned on the left side of the image, while the AI figure is on the right. Typically, things arranged on the left side of the page suggest the past, while anything positioned on the right side suggests looking forward in time (Zhang, 2018). This logic would imply that the masculine AI figure is the future to look forward to, while any importance the woman may have had will remain in the past. Furthermore, the binary code is beginning to cover part of the woman’s face, suggesting an engulfment and erasure of the woman by the masculine Artificial Intelligence technology. The suggestion that the masculine AI represents the future and superiority over the female, alongside the insinuation that the female figure symbolizes the past and inferiority, contradicts the headline’s optimistic message about achieving gender equality.

Researchers discussing the potentially problematic portrayal of women through voice assistants, which are specific types of AI that use speech recognition and natural language processing, have also unintentionally incorporated gender biases within their articles (Hulick, 2015). These biases result from the use of mixed language that both objectifies and feminizes the technology. One example of this is an article from the Brookings Institution (Villasenor, 2020). This article explicitly discusses the adverse effects of referring to voice assistants in human-like ways. It notes that when Cortana (Microsoft’s voice assistant) was spoken to in a way that would be considered harassment, it “responded by reminding the user she is a piece of technology” (Villasenor, 2020). This sentence suggests that Cortana is a non-human object through the word “piece,” which is especially objectifying. The colloquial term “sidepiece,” for example, refers to a person who is objectified because of a solely physical relationship with another person; the term strips them of their emotional worth in a degrading way that also reduces their humanity. However, describing Cortana’s reaction with the word “responded” insinuates that this AI is similar to humans in its ability to formulate thoughts, because “responded” is often used to refer to people who have conscious autonomy over their thinking processes. Instead, it may be more appropriate to use a phrase such as “generated a response.” The word “generated” insinuates that inputs and outputs were involved, which is more suitable for conversations about technology. Additionally, the word “she” is a gendered term that gives Cortana a human-like femininity. This diction becomes especially problematic when considering the implications of the word “user” in relation to Cortana, since this word can be used in the context of manipulating and taking advantage of someone. This implication is therefore demeaning for women because Cortana is identified in this sentence as a woman while simultaneously being objectified as something that can be easily manipulated and controlled. Furthermore, because these human-like implications appear in the same sentence as the dehumanizing ones, Cortana is portrayed as both a woman and an object at once.

An article focusing on Siri (Apple’s voice assistant) from the Computer Law & Security Review has a similar effect through its problematic rhetoric (Ni Loideain & Adams, 2020). This piece explicitly states that labeling voice assistants as female “may pose a societal harm.” Yet the writers denounce the feminization of voice assistants while continuously referring to Siri as “she” (Ni Loideain & Adams, 2020). This humanizing, and specifically feminizing, pronoun gives Siri a human-like (and woman-like) identity. As with Cortana, the phrase “her users” implies that Siri can be easily manipulated and controlled (Ni Loideain & Adams, 2020). Additionally, the subsequent use of the word “programmed” insinuates that Siri was made to be submissive and denies the AI any autonomy (Ni Loideain & Adams, 2020). The word “programmed” indicates that Siri was given specific instructions dictating how it must generate a reaction. These otherwise harmless terms for non-human pieces of technology take on offensive undertones once the voice assistants are referred to as human women with the pronoun “she.” Equating these easily, and intentionally, manipulated voice assistants with women reveals an implicitly biased view of human women as objects that may rightfully be manipulated and that lack autonomy. Framed through this comparison, the article’s discriminatory rhetoric directly contradicts its own criticism of feminizing voice assistants.

AI algorithms are created to “model human processes” (Chandler, 2021). Essentially, this means that any inherent biases humans hold and express “are reproduced by machines” (Søraa, 2023). Researchers have routinely recognized and condemned these biases. While AI seems to have many negative consequences, there are also optimistic outlooks on its future use. Increasing the diversity of the people who work in the field of Artificial Intelligence is proposed as a solution to gender inequality in AI (Kohl, 2022, p. 324). However, regardless of the demographics of the people working in that field, the issue of biased Artificial Intelligence may never be solved. Because there is no consensus on what counts as discriminatory, it would be impossible to entirely eliminate “all types of discrimination” found within AI (Mukherjee, 2023, pp. 1–13).

Furthermore, conversations surrounding AI largely fail to recognize identities outside of the gender binary; such identities are rarely addressed in articles and other resources. As the examples above show, even sources that explicitly aim to condemn gender biases ultimately fail to prevent the perpetuation of gender-biased results in AI. Artificial Intelligence has access to sources on the internet, and it may recognize those that condemn current biases as beneficial references for reducing its own bias (Mukherjee, 2023, pp. 1–13). AI outputs based on these flawed sources could therefore prove increasingly problematic. If AI references sources such as the articles and images discussed here, it will likely mimic the same rhetoric, continuing a cycle of harmful biases against women, as well as against those who identify outside of the gender binary. While we may not be fully capable of preventing AI from reproducing biased rhetoric, researchers can help diminish it by being more mindful of the visuals and language they use to discuss gender-related issues in their articles.

Sources

Beck, M., & Libert, B. (2017, December 19). Could AI Be the Cure for Workplace Gender Inequality? MIT Sloan Management Review. https://sloanreview.mit.edu/article/could-ai-be-the-cure-for-workplace-gender-inequality/

Chandler, K. (2021). Does Military AI Have Gender? Understanding Bias and Promoting Ethical Approaches in Military Applications of AI. Geneva, UNIDIR. https://unidir.org/wp-content/uploads/2023/05/UNIDIR_Does_Military_AI_Have_Gender.pdf

Healey, J. (Ed.). (2020). Artificial intelligence. The Spinney Press. https://ebookcentral.proquest.com/lib/unc/reader.action?docID=6034013&ppg=6

Hulick, K. (2015). Artificial intelligence. ABDO Publishing Company. https://ebookcentral.proquest.com/lib/unc/reader.action?docID=5261961&ppg=39

Kohl, K. (2022). Driving Justice, Equity, Diversity, and Inclusion (1st ed.). Auerbach Publications. https://doi.org/10.1201/9781003168072

McGuire, A. (2018, September 14). Achieving Gender Equality Through AI. Irish Tech News. https://irishtechnews.ie/achieving-gender-equality-through-ai/

Mukherjee, A. (2023). AI and Ethics: A Computational Perspective. IOP Publishing. https://iopscience.iop.org/book/mono/978-0-7503-6116-3.pdf

Ni Loideain, N., & Adams, R. (2020). From Alexa to Siri and the GDPR: The gendering of Virtual Personal Assistants and the role of Data Protection Impact Assessments. Computer Law & Security Review, 36, 105366. https://doi.org/10.1016/j.clsr.2019.105366